For more than a decade, smart glasses have lived in the “almost there” category — technically impressive, socially awkward, and rarely essential to how people actually work.

That may finally change in 2026.

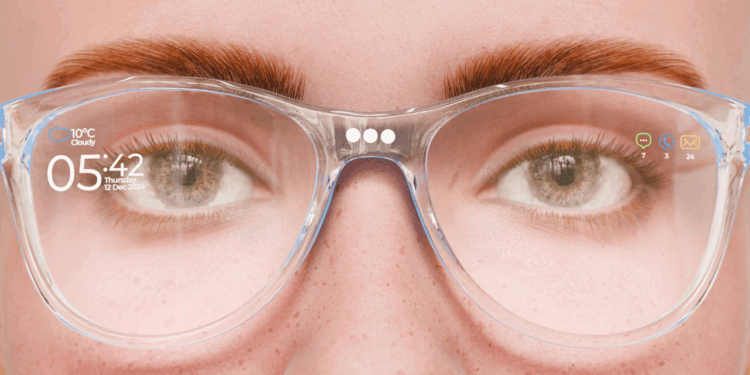

A new generation of lightweight, AI-powered smart glasses is shifting augmented reality (AR) away from novelty and toward everyday utility. Instead of putting a smartphone (or a bulky headset) on your face, the next wave of devices is designed to quietly assist, surface context, and reduce friction in real work environments, at least potentially.

At the center of that shift is Google’s return to smart glasses, this time with Gemini as the core experience.

Why smart glasses are finally lining up with work

Earlier AR attempts struggled because they asked workers to adapt to the technology. The emerging 2026 models flip that equation: they’re built to fit into existing workflows, not replace them.

Three trends are converging:

- Ambient AI is becoming more valuable than apps. Workers don’t want more dashboards; they want answers in the moment.

- Hybrid work has fragmented attention across physical and digital spaces, increasing the need for hands-free, context-aware tools.

- Design maturity has made smart glasses socially acceptable — something you can wear all day, not just demo once.

Instead of immersive virtual worlds, the focus is now on subtle augmentation: listening, seeing, remembering, and assisting without demanding constant interaction.

Google’s second attempt — and why this time is different

Google Glass failed in part because it arrived too early and tried to do too much. Its 2026 return is far more restrained and far more strategic.

Google has confirmed that its next-generation smart glasses, developed with partners like Warby Parker and Gentle Monster, will launch in 2026 with two distinct form factors, according to Fortune.

The first form factor is screen-free AI glasses. These look like regular eyewear but include microphones, speakers, and cameras that allow users to interact with Gemini conversationally. There are no floating notifications and no visible display, just an assistant that understands what you’re looking at, hears what you’re asking, and responds quietly.

For work, this could be transformative. Imagine:

- A facilities manager verbally logging issues while walking a building

- A consultant asking for background on a client the moment they walk into a meeting

- A coworking operator recalling member preferences without checking a CRM

The second category — display-enabled AI glasses — adds a lightweight in-lens display. Unlike full headsets, these are designed for quick, private visual cues: translations, directions, prompts, or step-by-step instructions that appear only when needed.

Google’s smart glasses rely on Android XR, the company’s extended reality platform, to turn Gemini AI into a practical workplace tool. At CES 2026, Android XR demos highlighted everything from spatial navigation and real-time translations to hands-free Google Maps and PC Connect, showing how AI assistance can move seamlessly across devices. By embedding Gemini in Android XR, Google positions its glasses as part of a connected ecosystem that supports mobile, frontline, and knowledge workers in real time.

For frontline, technical, and mobile workers, this kind of just-in-time visual support could replace clipboards, tablets, and constant phone checks.

Why Google could succeed where others haven’t

Meta and Apple are also betting on face-worn computing, but Google has a unique advantage in the workplace: contextual intelligence at scale.

Gemini is an AI system deeply embedded across search, email, documents, calendars, and Android devices. That matters at work. A smart assistant that knows your schedule, understands your documents, and recognizes your environment is far more useful than one that simply overlays information.

Google is also positioning its glasses as part of the broader Android XR ecosystem, not a standalone gadget. Features like PC Connect — allowing users to pull a live desktop window into their field of view — and business-focused travel modes signal a clear enterprise intent.

For flexible work environments, this is especially relevant. Smart glasses don’t require dedicated desks, large setups, or fixed infrastructure. They move with the worker, whether that’s to a coworking space, client site, home office, or airport lounge.

Smart glasses at work: what changes in 2026

If adoption accelerates as expected, 2026 could mark a turning point, with AR showing up at work as background infrastructure.

Some likely developments:

- Meetings become less screen-dependent. Glasses can surface names, roles, or agenda items without laptops open.

- Training shifts from manuals to overlays. Visual guides anchored to real equipment reduce onboarding time.

- Knowledge work becomes more mobile. Workers can capture notes, photos, and insights without stopping to type.

- Accessibility improves. Real-time captions, translations, and visual cues expand who can participate fully at work.

Crucially, these changes don’t require companies to redesign everything. Smart glasses layer intelligence onto what already exists.

Why style and discretion matter

One of Google’s most important decisions this time around is partnering with eyewear brands rather than positioning the product as a tech accessory.

Workplace tech only scales when people are comfortable wearing it. Glasses that look like glasses, not gadgets, lower social barriers and increase adoption. They also make AR viable in professional settings where conspicuous hardware can feel intrusive or inappropriate.

This emphasis on choice — screen-free versus display-enabled, fashion-forward versus utilitarian — signals that smart glasses are finally being treated as personal tools, not corporate-mandated productivity efforts.

Privacy, surveillance, and the ethics question smart glasses can’t avoid

If smart glasses are going to become everyday workplace tools, privacy concerns will move from hypothetical to unavoidable.

Always-on cameras and microphones — especially in shared offices, coworking spaces, and client environments — raise questions about consent, data ownership, and surveillance. Even if recordings aren’t stored, the perception of being watched can change behavior, collaboration, and trust at work.

There’s also the issue of contextual data creep. Glasses that recognize faces, recall past interactions, or surface private information in real time could blur lines between helpful memory aid and inappropriate insight — particularly in HR, management, or customer-facing roles.

Google appears aware of this risk. Its emphasis on screen-free modes, subtle visual cues, and user-controlled activation suggests an attempt to keep smart glasses assistive rather than extractive. But policies, norms, and clear workplace guidelines will matter as much as the hardware itself.

For smart glasses to succeed at work, companies will need to answer hard questions early: when they can be used, who controls the data, and how to protect coworkers who never opted in. Without that clarity, adoption could stall no matter how advanced the technology becomes.

The bigger picture: AR without the spectacle

The future of workplace AR won’t look like sci-fi. There will be no holograms floating in open-plan offices or virtual avatars replacing colleagues.

Instead, AR will become more subtle, more selective, and more useful. Smart glasses should reduce cognitive load and support human interaction.

If Google and its competitors get this right, 2026 will be remembered as the year smart glasses stopped being strange, and perhaps even started being necessary.

Dr. Gleb Tsipursky – The Office Whisperer

Nirit Cohen – WorkFutures

Angela Howard – Culture Expert

Drew Jones – Design & Innovation

Jonathan Price – CRE & Flex Expert