- Deepfakes are used to create fake content, increasing fraud and workplace harassment risks.

- Women are disproportionately affected by deepfakes, with career and personal impacts.

- The “Take It Down Act” aims to help victims of deepfake harassment remove harmful content.

No one wants to be subject to something that they didn’t consent to, whether at work or in their personal lives.

Unfortunately, with the rise of new technologies, non-consensual acts are becoming increasingly common.

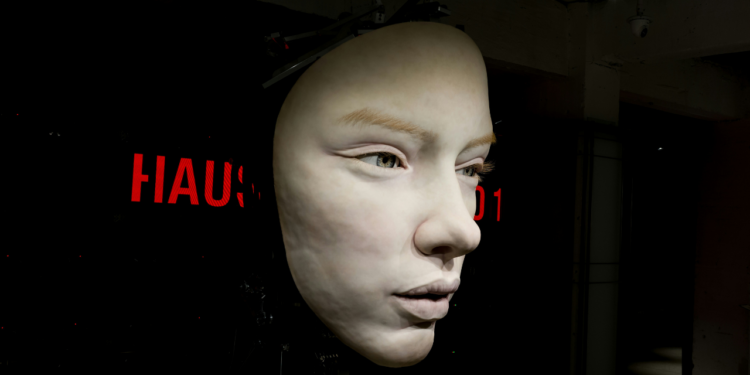

In recent years, deepfakes (hyper-realistic videos or images manipulated by artificial intelligence) have raised alarm bells across multiple industries. They can depict people in situations they never took part in.

Last year, a finance worker in Hong Kong was duped into transferring more than $25 million to a fraudulent account after a video conference with what appeared to be his CFO and other coworkers, who were in fact deepfakes.

While the technology behind deepfakes is undeniably impressive, its darker side is increasingly concerning, especially when it comes to fraud and sexual harassment in the evolving workplace.

Deepfakes can be especially harmful in professional environments, where reputations and careers are at stake. When fabricated images or videos are used maliciously to target someone, the consequences can be devastating, and the resulting harassment becomes harder to prove and address.

Deepfakes Are a Growing Problem in the Workplace

Workplace sexual harassment has long taken many forms, and as digital tools have become more advanced, it has acquired a new one: image-based violence.

Imagine you’re a professional with a respected career. You’ve worked hard to get where you are. One day, you discover that someone has harassed you by creating a deepfake video or image that shows you in an inappropriate, compromising situation you never took part in or consented to.

The most horrific part is that this is no longer a hypothetical scenario: 26 members of Congress (25 women and 1 man) have been the victims of sexually explicit deepfakes.

Deepfakes make sexual harassment in the workplace even more challenging to identify and address.

Victims may struggle to prove that they didn’t participate in the fabricated content, and in some cases, perpetrators can use the content to manipulate or discredit the victim, complicating efforts to achieve justice.

What makes this situation particularly dangerous is that deepfakes are very good at blurring the line between what is real and what is fabricated. When a convincing fake video or photo is in circulation, it is easy for others to dismiss a victim’s claims or downplay the gravity of the situation, even as the content damages careers and reputations.

This adds another layer to the already difficult problem of handling sexual harassment in the workplace.

Women Will Suffer the Most

Sexual harassment at work is not a new problem, and it’s more pervasive than you might think. Sixty percent of women report experiencing unwanted sexual attention, sexual coercion, inappropriate behavior, or sexist remarks in the workplace.

In an AAUW survey, 38% of women who experienced harassment reported that it influenced their decision to leave a job prematurely, while 37% said it hindered their career progression.

Many women targeted by deepfakes and other forms of image-based sexual abuse have reported experiencing trauma, post-traumatic stress disorder, anxiety, depression, a loss of control, a diminished sense of self, and difficulty trusting others.

Because women experience the majority of sexual harassment, deepfakes used in this way are likely to affect women far more than men. The threat of deepfakes can also heighten fear and anxiety among women, particularly those in industries where their image or reputation is key to their career success.

A single malicious deepfake could ruin a woman’s professional standing, making it difficult to rebuild trust with colleagues or clients.

This adds a new layer of uncertainty to the workplace, where digital manipulation can now threaten not only a woman’s personal dignity but also her livelihood.

The TAKE IT DOWN Act and the Fight for Protection

Senator Ted Cruz (R-TX) and Senator Amy Klobuchar (D-MN) are sponsoring the Take It Down Act, a bill that would make it illegal to distribute “non-consensual intimate imagery and deepfake pornography.” Last month, First Lady Melania Trump hosted a roundtable to raise awareness of the bill.

The Take It Down Act would make it easier for victims of deepfake harassment to take legal action and demand the removal of fake content, helping prevent it from spreading and potentially harming the careers of innocent people.

The idea behind the bill is to empower victims by giving them a direct means of dealing with this issue, potentially reducing the prevalence of deepfake harassment and discouraging those who might try to use this technology maliciously.

The act has passed the Senate but has been held up in the House since December.

A New Frontier for Sexual Harassment

As companies (should) strive to create safe, respectful workplaces, they must also consider how new technologies like deepfakes can exacerbate sexual harassment in ways they haven’t fully addressed.

While laws like the Take It Down Act are a step in the right direction, it’s clear that this technology has opened up new ways to harass and control victims, especially in work environments where power dynamics and reputations already play a major role in preventing individuals from speaking up.

Employers and lawmakers need to recognize how deepfakes can be used against victims of workplace harassment, particularly sexual harassment, and implement strategies and policies that address this threat head-on.

Employers should educate their staff about the risks of deepfakes, encourage employees to report suspicious content, offer support to those affected by this emerging form of harassment, and, most of all, effectively punish those who create and distribute it.

Dr. Gleb Tsipursky – The Office Whisperer

Nirit Cohen – WorkFutures

Angela Howard – Culture Expert

Drew Jones – Design & Innovation

Jonathan Price – CRE & Flex Expert